Independent Researcher

Biography

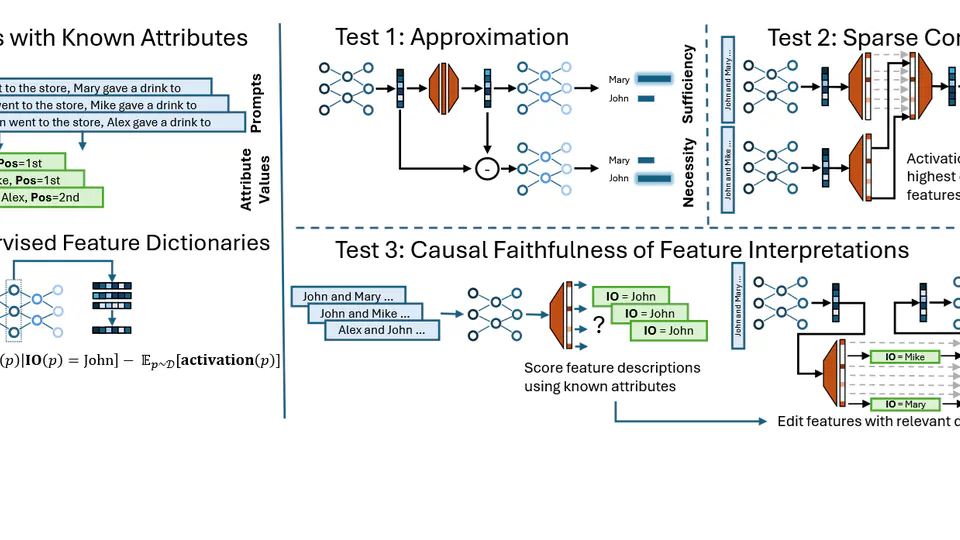

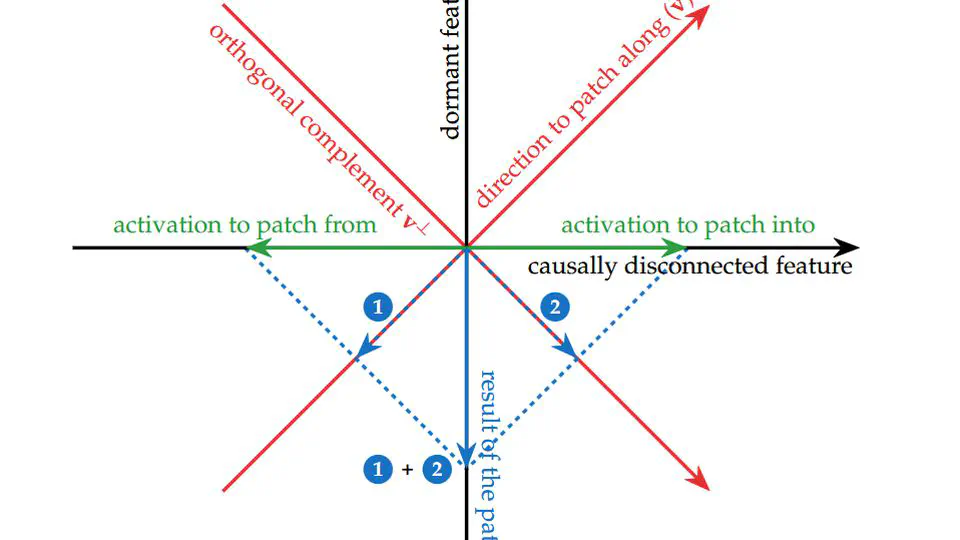

I’m an independent researcher working on Mechanistic Interpretability for LLMs. My work covers circuit tracing, dictionary learning, and automated interpretability, with the goal of building technology that is broadly useful for detecting deception, intent, and misalignment.

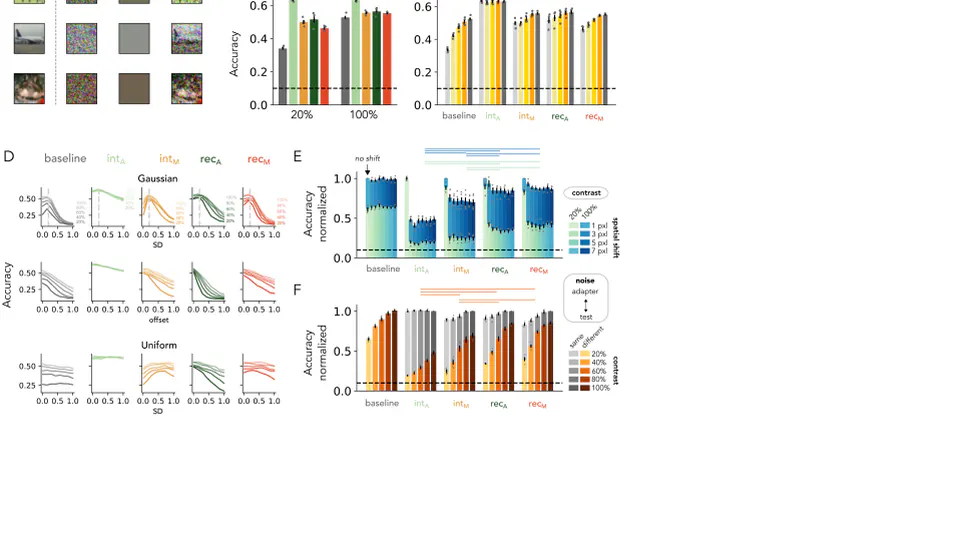

I was a MATS scholar and worked with Alex Makelov and Neel Nanda on Sparse Autoencoders and Distributed Alignment Search for feature detection and subspace activation patching. Previously, I was an MSc AI student at the University of Amsterdam, where I worked on brain-like, interpretable spatiotemporal Computer Vision models, supervised by Prof Iris Groen and Amber Brands.

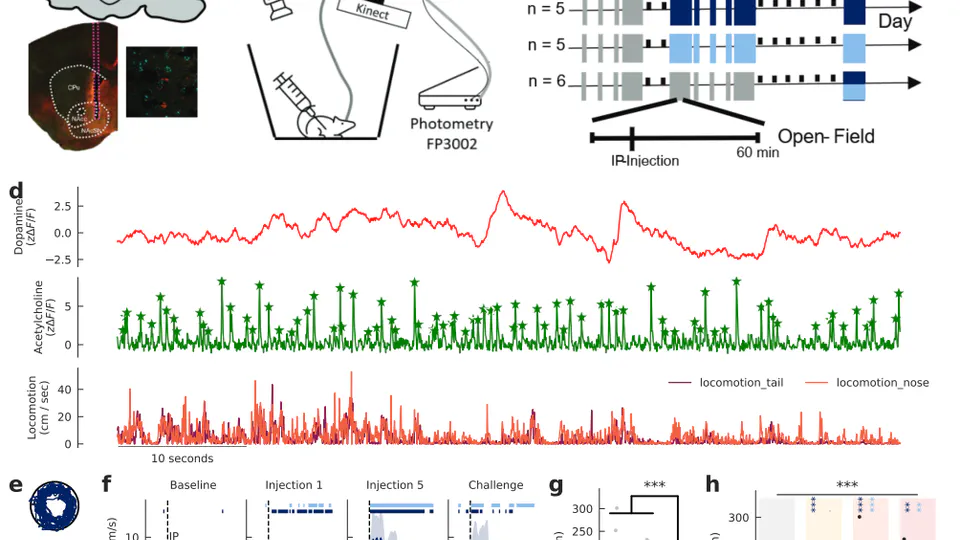

I was also a Fulbright student at the Graduate Center, CUNY, where I worked on Reinforcement Learning, Decision Making, and Reward Sensitization, and conducted fiber photometry experiments in the Nucleus Accumbens of mice, supervised by Prof Jeff Beeler.

- Artificial Intelligence

- Mechanistic Interpretability

- Systems Neuroscience

M.Sc. Artificial Intelligence

University of Amsterdam

M.Sc. Cognitive Neuroscience

Graduate Center, City University of New York

B.Sc. IT-Systems Engineering

Hasso-Plattner-Institut, Potsdam